Outage RFO for September 6th/7th, 2019

On Friday, September 6th, the Selfnet core network was completely offline for several hours. We got the network back online, but the apparent fix also led us down the wrong path. Because of that, more (but shorter) outages followed. So what happened? Here’s the Reason For Outage (RFO).

Volunteer Work

Selfnet is powered by 100% volunteer work. Friday morning in early September is probably the worst time for a big outage. Students are often not on campus, or when they are, they’re preparing for exams. This of course also applies to the Selfnet folks. Early September is also a very busy time, because students move in and out of the dormitories, so there’s a lot to do at the currently six Selfnet offices.

That means: an outage during those times is especially painful, because there is already a lot of work and few of us are here. So if you would like to join us, learn, experiment and build cool stuff with us, please don’t hesitate to visit or email us!

Timeline

So here is what happened, in chronological order.

Thursday, around 9pm

We have been using Juniper EX4500 switches for our network core since we bought them in 2012. We also have some EX4600 switches, which are a newer platform. They seem to have fewer bugs, a better CPU, etc., so we decided to replace the remaining EX4500s. On Thursday, the two core switches “vaih-core-1” and “vaih-core-3” were replaced. We already run a few EX4600s and they work smoothly with the features we’re using, so we didn’t expect any issues.

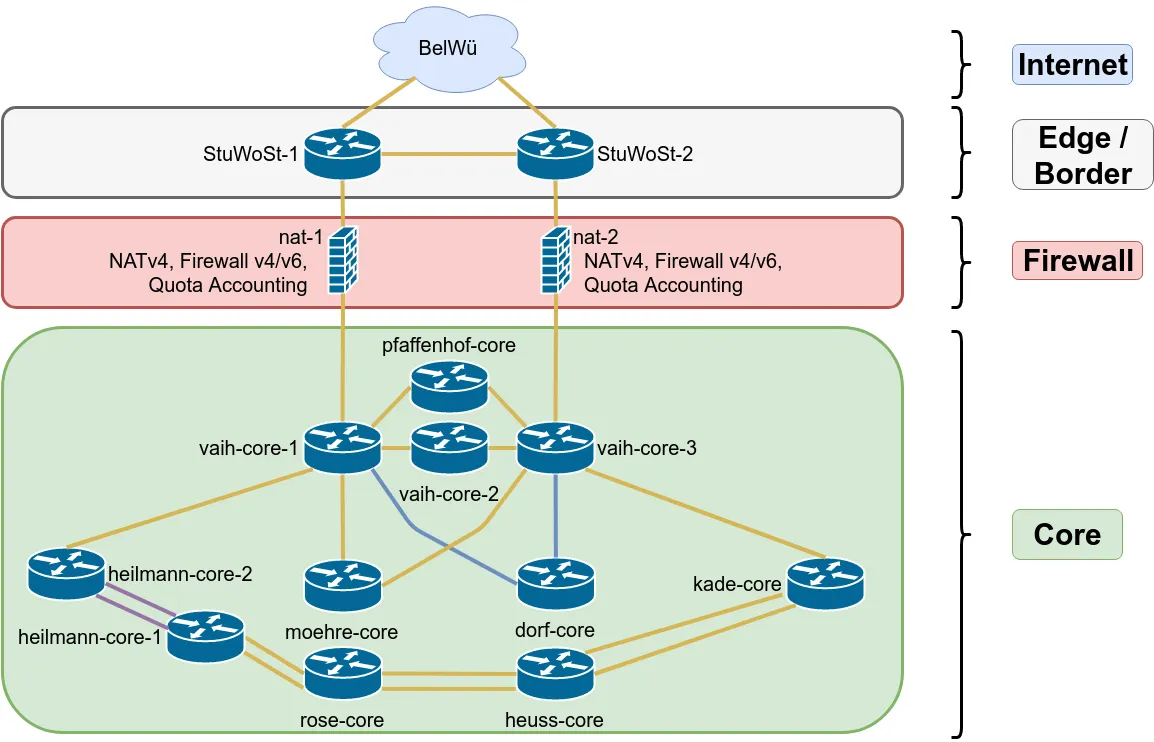

Selfnet Core Topology

As you can guess from the topology, everything should be redundant. Even the access switches are usually interconnected, so we can replace the core switches one by one, without any outages.

After the replacement, we didn’t see any issues, everything was running fine as usual.

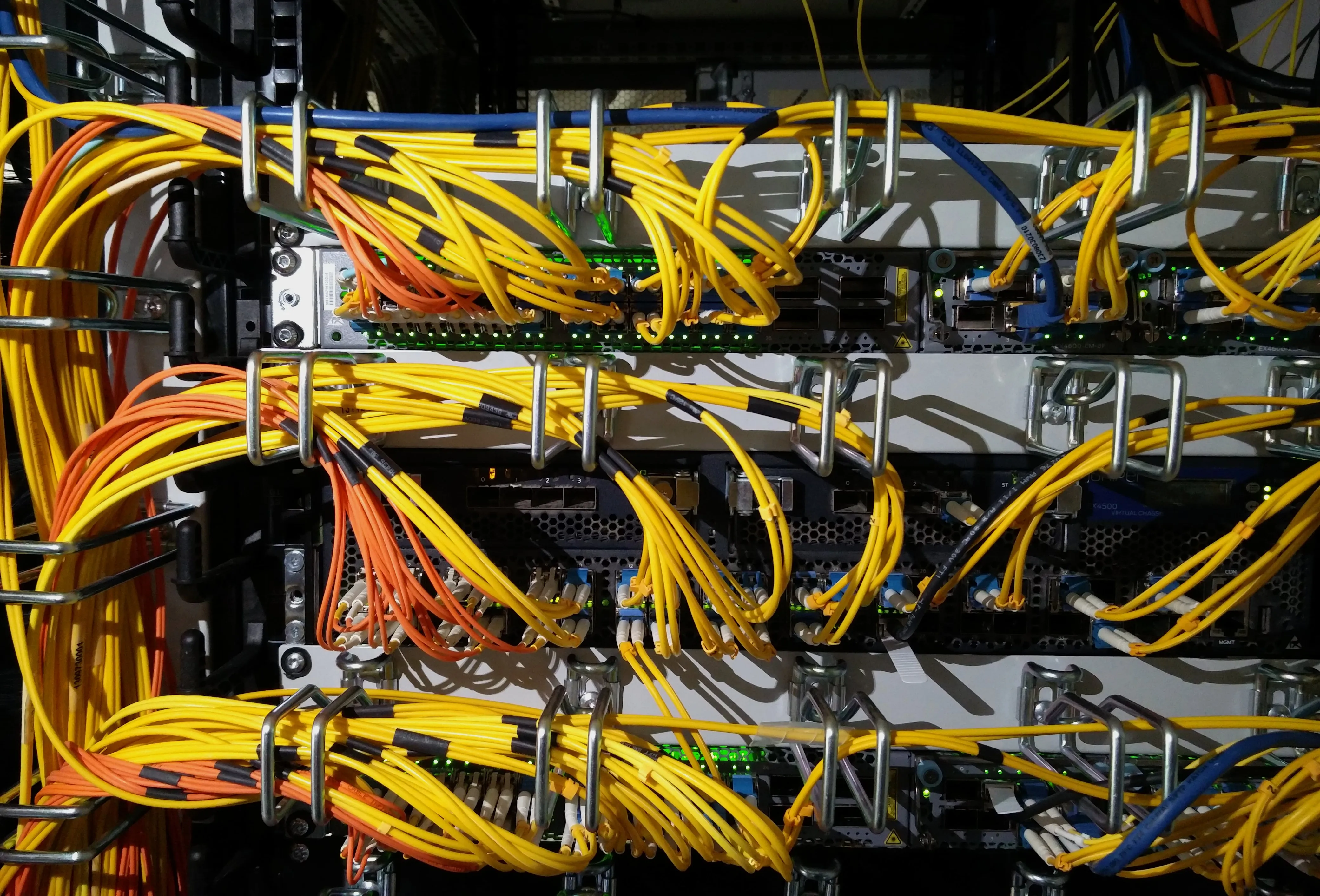

vaih-core-1/2/3 (top to bottom: EX4600, EX4500, EX4600)

Friday, 2:18am

Selfnet is suddenly offline. A few of us are still awake and try to find out what has gone wrong. As you can see in the topology, there is enough redundancy and this should never happen.

Friday, 3:26am

There seems to be an issue with one of the NAT/firewall machines: traceroutes are stuck there and the machines don’t seem to be forwarding traffic. nat-1 gets rebooted, but won’t boot because of hardware issues. When nat-1 is finally back, the connection tracking daemon “conntrackd” doesn’t start and needs manual intervention.

After some more debugging, the guys who were still awake gave up and went to bed.

Friday, around 6am

More people wake up. Since Selfnet is still offline, email, Jabber and IRC chat aren’t working. We set up an emergency IRC channel on another IRC network and create a CryptPad to collect all the information we have and to coordinate work.

Friday, 8:57am

Someone is back on site. Routing looks fine, but traceroutes end at nat-2. The forwarding between core and edge routers seems to be broken, but routing still uses this path, as OSPF packets get through. The firewall nat-2 won’t let anyone log in, probably because of high load or forwarding issues. As a last resort, nat-2 gets rebooted, too. Somehow this solves the problem.

We notice that the core switches have logged “DDoS violations”. That could mean a storm of OSPF packets because of link flaps or duplicate packets introduced by forwarding issues.

We’re not sure yet why forwarding stopped working while OSPF packets still got through, but for now Selfnet is back in business. Since the mail server is back online, we’re receiving well over 100 emails from members with questions and complaints. So we start answering them, telling everyone that we had an outage, that it’s working again, that we’re still investigating the root cause and that there might therefore be another interruption.

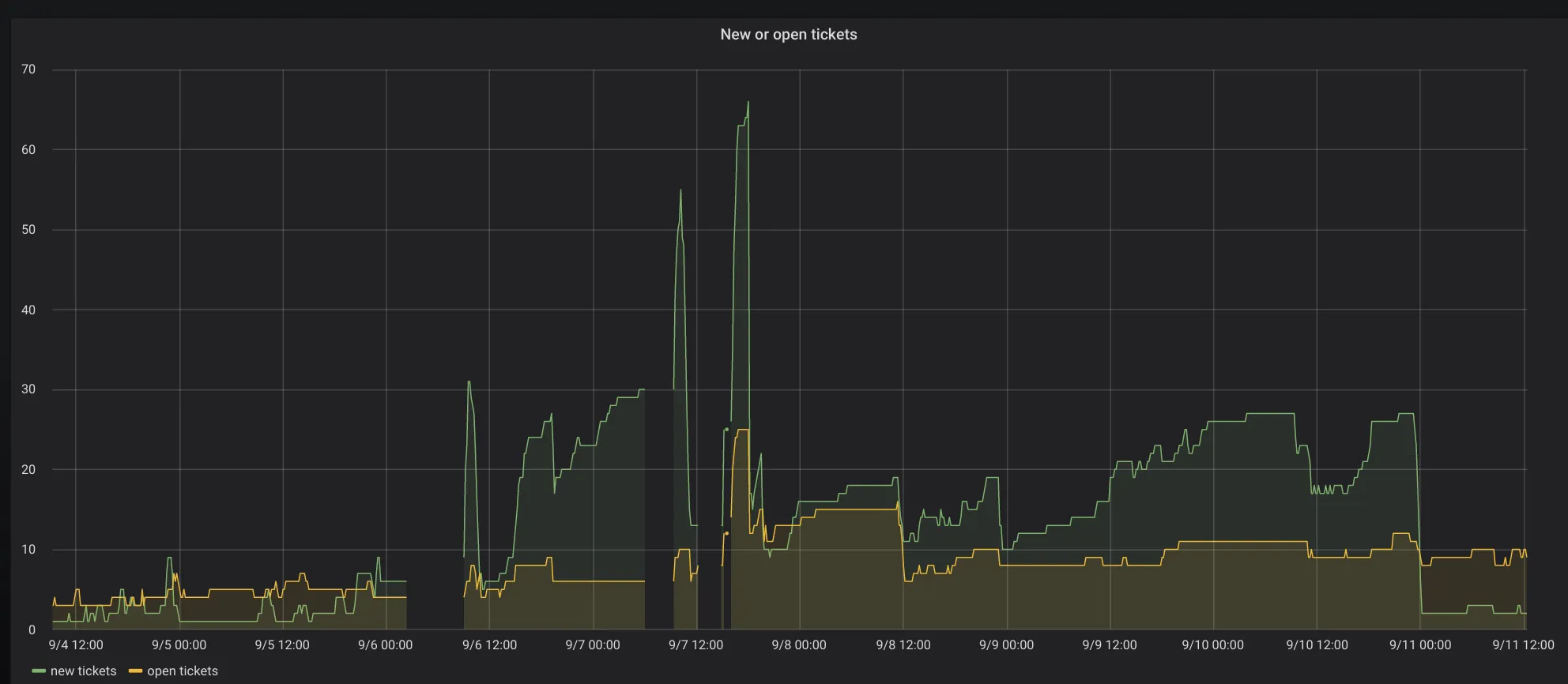

Visualisation of new or open tickets in our ticket system (emails sent to support@selfnet.de) being rapidly answered by volunteering members.

Friday, between 9am and 10am

Still debugging the outage. The logs show recoverable memory issues (i.e. not hardware faults). So that would explain why a reboot solved the problem.

nat-1 gets a run of “memtest” to check the RAM banks for any physical damage, but it shows no errors.

Friday, 11:45am

Currently both IPv6 and IPv4 get routed via nat-2. We usually split the traffic by address family, so nat-1 handles IPv4 and nat-2 handles IPv6 by default. They also sync their connection tracking states, so a failover won’t break any open connections and nobody will notice.

We’re reverting to splitting the traffic and moving IPv4 back to nat-1.

Friday, 2:20pm

Another outage. This time, only IPv4 is affected. nat-1 shows high CPU load, which is almost exclusively SoftIRQs. We analyzed this in a previous blog post (German) and the level of SoftIRQs is pretty much an indicator of the amount of traffic (packets per second) going through the firewall. This is either a hefty DDoS attack, a problem with nat-1 or packets bouncing back and forth between the routers.
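For readers who want to look at the same metric on a Linux machine, here is a minimal sketch of how a per-core SoftIRQ share can be derived from two snapshots of `/proc/stat`. It is an illustration only and not the collector behind our graphs; the function name `softirq_share` is made up for this example, and it assumes the usual field order of the per-core `cpuN` lines (user, nice, system, idle, iowait, irq, softirq, steal).

```python
def softirq_share(before: str, after: str) -> dict[str, float]:
    """Return the softirq share (0.0 to 1.0) per CPU core between two
    snapshots of /proc/stat, passed in as plain text."""

    def parse(text: str) -> dict[str, list[int]]:
        rows = {}
        for line in text.splitlines():
            fields = line.split()
            # Keep only per-core lines such as "cpu0 ..."; skip the
            # aggregated "cpu" line and everything else (intr, ctxt, ...).
            if fields and fields[0].startswith("cpu") and fields[0] != "cpu":
                rows[fields[0]] = [int(value) for value in fields[1:]]
        return rows

    old_rows, new_rows = parse(before), parse(after)
    shares = {}
    for cpu, new in new_rows.items():
        old = old_rows.get(cpu)
        if old is None or len(old) < 7 or len(new) < 7:
            continue  # core missing in first snapshot or unexpected format
        deltas = [n - o for n, o in zip(new, old)]
        # Sum user..steal only; guest time is already counted in user/nice.
        total = sum(deltas[:8])
        # Index 6 is the softirq counter.
        shares[cpu] = deltas[6] / total if total > 0 else 0.0
    return shares
```

To try it, read `/proc/stat` twice a few seconds apart and pass both texts to the function. A single core close to 1.0 while the others stay low would match the pattern described in this post.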

For some reason, another reboot of nat-1 resolves the problem.

Friday, 10pm

The same problem now happens with IPv6 via nat-2. Again, SoftIRQs are at 80% (which, if you consider the impact of hyperthreading, means about 100% hardware utilization).

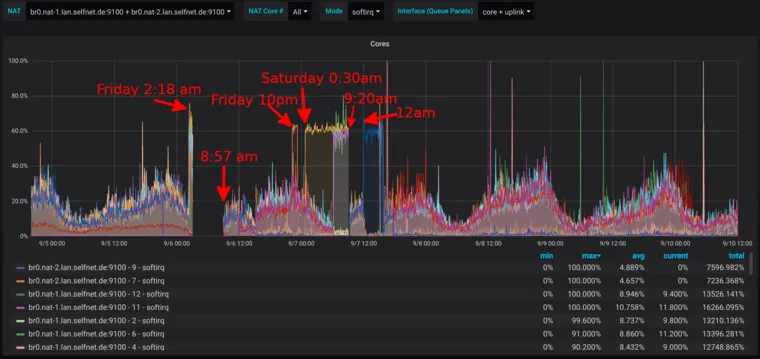

Screenshot of the Grafana SoftIRQ CPU usage monitoring

We already know that for some reason, a reboot can fix this, so we’re rebooting nat-2, to get traffic flowing for the users, although this makes it harder for us to debug.

Traffic for both IPv4 and IPv6 is moved back to nat-2 now.

Saturday, 0:30am

SoftIRQs on both nat-1 and nat-2 are rising again. Both firewalls are in trouble once more and the network becomes unstable.

Saturday, 6am

Again, the firewalls collapse and the network is down.

Unfortunately, nobody is there to reboot the boxes, and since everything is down, we can’t reboot the boxes remotely.

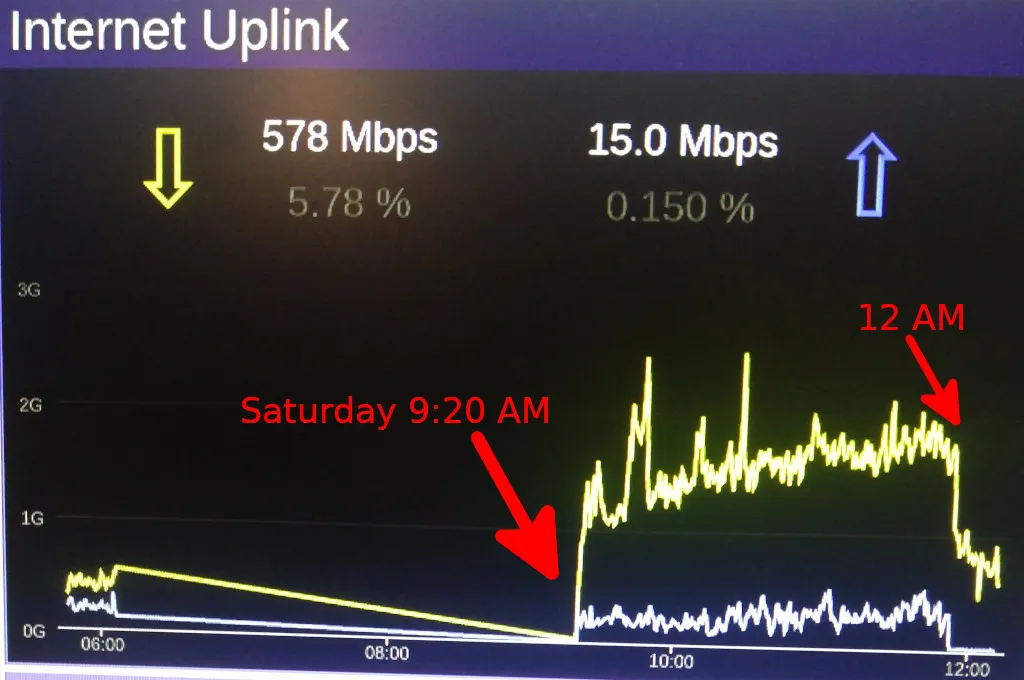

Saturday, 9:20am

Another reboot fixes the problem again.

Uplink graph showing traffic increase when the problem is fixed (again) around 9:20 AM and another sharp drop in traffic volume at 12 AM.

Saturday, 12am

The issue is back again. We notice that both new core routers have high CPU utilization. The “Packet Forwarding Engine Manager”, fxpc, is using up all the resources. The other EX4600s in our network run a different (older) software version than the two new ones, so we suspect that this might be another JunOS bug that was introduced in the new firmware. Sadly, this happens a lot with network vendor firmware. We decide to downgrade to the known good release that’s running on the other EX4600s. During the downgrade, we see crashes and kernel dumps. The installation then fails due to filesystem errors. A complete reformat of the disks and a fresh installation finally gets them running again.

After both boxes have been downgraded, CPU utilization is back to normal, and fxpc is in the lower single-digit CPU percentage. Also, the OSPF issues are gone, the firewalls are working normally again, and we haven’t seen this issue since.

So what happened?

It’s hard to tell for sure, but we think that the Juniper boxes have a bug that’s causing forwarding and OSPF issues. If you look at the SoftIRQ monitoring, it was always one CPU core that was overloaded during the outages. Usually the packets are load-balanced across all the CPU cores. The decision which core to use is made on a per-flow basis, which means that a packet’s source and destination IP addresses, its ports and the protocol number are hashed. The hash value is then used to select one CPU core. If all packets have the same flow hash, they are all handled by the same CPU core.
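The per-flow core selection described above can be sketched as follows. This is a simplified illustration and not the code that runs on our firewalls: SHA-256 is only a stand-in here, since real hardware typically uses a different hash function (for example a Toeplitz hash in receive-side scaling), and the name `flow_to_core` is hypothetical.

```python
import hashlib
import ipaddress
import struct


def flow_to_core(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
                 protocol: int, num_cores: int) -> int:
    """Map a flow (5-tuple) to a CPU core index in range(num_cores)."""
    # Build a byte string from the fields that identify the flow.
    key = (
        ipaddress.ip_address(src_ip).packed
        + ipaddress.ip_address(dst_ip).packed
        + struct.pack("!HHB", src_port, dst_port, protocol)
    )
    # Stand-in hash (assumption): any deterministic hash shows the idea.
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:4], "big") % num_cores


# Many different flows spread over the cores ...
cores_used = {
    flow_to_core("10.0.0.1", "192.0.2.10", port, 443, 6, 8)
    for port in range(40000, 40200)
}

# ... but packets of one and the same flow always land on the same core.
same_flow = {
    flow_to_core("10.0.0.1", "192.0.2.10", 40000, 443, 6, 8)
    for _ in range(1000)
}
```

The second case is the problematic one: no matter how many cores the machine has, a flood of packets with an identical 5-tuple is limited by the performance of a single core.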

Therefore we think that there had to be some kind of forwarding issue, where certain packets bounced back and forth between firewalls and core switches, until the link and the single-core performance were saturated with handling those packets. Sadly, we focused on fixing this issue as quickly as possible and didn’t collect any packet dumps to analyze it further.
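To give a feeling for why bouncing packets are so expensive, here is a small sketch of the amplification in a forwarding loop. It only illustrates the hypothesis above and is not based on captured traffic. It assumes that every device in the loop decrements the TTL (or IPv6 hop limit) by one and drops the packet once it reaches zero; the function name and the two-device loop are made up for the example.

```python
def times_handled_in_loop(initial_ttl: int, devices_in_loop: int = 2) -> list[int]:
    """Count how often each device in a forwarding loop handles ONE packet
    before its TTL runs out."""
    handled = [0] * devices_in_loop
    ttl = initial_ttl
    device = 0  # the packet enters the loop at device 0
    while ttl > 0:
        handled[device] += 1  # this device has to process the packet
        ttl -= 1              # each routed hop decrements the TTL by one
        if ttl == 0:
            break             # TTL expired, the packet is dropped here
        device = (device + 1) % devices_in_loop  # forward to the next device
    return handled


# A packet entering a two-device loop with a TTL of 64 is handled
# 32 times by each device instead of once.
print(times_handled_in_loop(64))
```

Under these assumptions, every looping packet costs the firewall dozens of times the work of a normal packet, and because it keeps the same flow hash on every pass, all of that work lands on one core.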

What now?

For now, the problem seems to be solved after the firmware downgrade. There is still an ongoing service request in which Juniper is trying to resolve the problem, because both new EX4600 core switches still show severe CPU utilisation spikes. These are not really noticeable for users, but they do not look healthy.

But also (as we already noted in the NAT and firewall blog post) the firewall servers are a bit outdated. There are plans to replace them with new gear. We currently have demo equipment with modern AMD Epyc CPUs and 4x 10GbE NICs and will replace the old boxes soon.

AMD Epyc Demo Equipment for testing

There are also ideas to change the topology, so only user traffic passes the firewalls and server traffic goes to a separate DMZ.